Experiential Computing Blog

Whether You Want to Work from Home, or Study, or Play, a Smart Screen Will Be Right Up Your Alley

Sep 02, 2022

By Sharmin Farah

Put simply, a smart screen is a battery-powered touch-screen device that connects to, shares content with, and interacts with other devices. Also known as a wireless monitor, it is used for activities that require little or no keyboard input. With built-in Wi-Fi, it offers a relatively low-cost, simple way to run web apps, join video conference calls, and share a workspace.

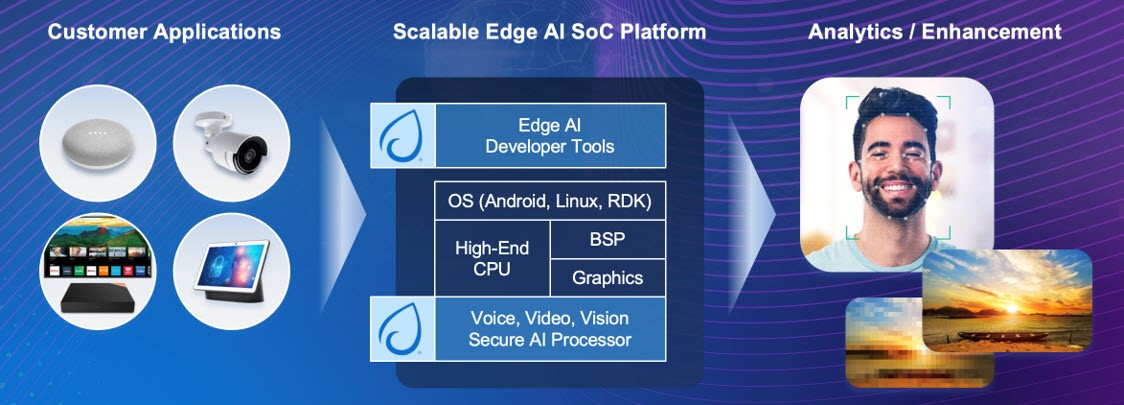

Smart screens have become an exciting innovation platform for consumer, industrial, and enterprise client computing. They gained popularity during the COVID-19 pandemic, when people started working from home. As outlined in a recent Synaptics article, by combining artificial intelligence (AI), wireless connectivity, touch, sound, and vision sensing, scalable and secure processing, and advanced display drivers and algorithms, smart screens can deliver fully immersive, context-aware user experiences. This is turning them into multifunctional control points from which users can securely interact with a broad range of devices. This article examines how hardware and software are evolving and working together to enable next-generation smart displays, focusing on how Visidon software running on Synaptics’ VS680 multimedia SoC enhances smart screen capabilities and supports a variety of use cases.

Though definitions vary, the rise of smart displays as central hubs across residential, commercial, and industrial applications has been well documented. Research and Markets, for example, expects the market to grow from $4.79 billion in 2021 to $16.16 billion by 2027, a CAGR of 22.56%. The growing number of internet users, the innovative features of smart mirrors, and rising demand for AI-driven, IoT-enabled smart home appliances are likely to drive this growth.
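As a quick sanity check on forecast figures like these, the compound annual growth rate is defined as (end / start)^(1 / years) - 1. The minimal sketch below applies that formula to the two quoted endpoints, assuming a six-year span from 2021 to 2027; the function names are our own. It gives roughly 22.5%, close to the quoted 22.56%, and the small gap may simply reflect rounding or a different base year in the underlying report.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


def project(start: float, rate: float, years: int) -> float:
    """Compound `start` forward at `rate` per year for `years` years."""
    return start * (1.0 + rate) ** years


if __name__ == "__main__":
    # Market sizes quoted above, in billions of USD (assumed 2021 -> 2027, six years).
    implied = cagr(4.79, 16.16, 6)
    print(f"implied CAGR: {implied:.2%}")  # roughly 22.5%
    print(f"2027 size at 22.56%/yr: ${project(4.79, 0.2256, 6):.2f}B")  # roughly $16.2B
```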

However, for smart displays to reach their full potential, the underlying hardware must meet demanding requirements for performance, features, security, cost, and efficiency, while the algorithms must be efficient, intuitive, and seamless, and must fully leverage the hardware’s capabilities. This requires close collaboration between hardware and software developers, as well as advanced, easy-to-use development tools.

Synaptics and Visidon are working together to provide a full solution that enhances the smart display user experience, combining Synaptics’ VS680 multimedia SoC (hardware) with Visidon’s algorithms (software). This article looks more closely at two Visidon algorithm groups, face analysis and depth compute, which have been ported to and optimized for the VS680 to address different use cases.

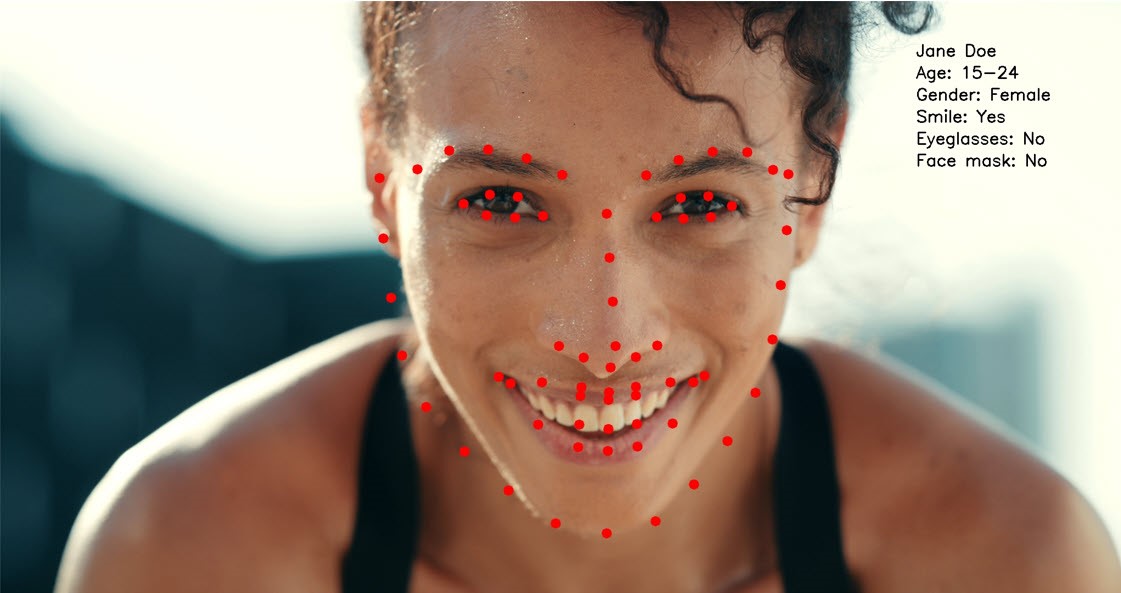

Visidon’s face analysis algorithms include face detection, tracking, and recognition; age, gender, and smile analysis; and eyeglass and face mask detection. These algorithms enable access control and content personalization on smart screens. Face recognition, for example, is useful for organizing photos, securing devices such as laptops and phones, and assisting people who are blind or have low vision.
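Face-recognition-based access control is commonly built by comparing a face embedding captured by the camera against embeddings enrolled for authorized users. The sketch below shows only that matching step, assuming the embeddings come from some recognition network; the function names, the toy three-dimensional vectors, and the 0.6 threshold are hypothetical, and the article does not describe how Visidon’s recognition works internally.

```python
import math
from typing import Dict, List, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify(probe: Sequence[float], enrolled: Dict[str, List[float]],
             threshold: float = 0.6) -> Optional[str]:
    """Return the best-matching enrolled user, or None if nobody clears the threshold.

    The 0.6 default is a hypothetical value; real systems tune it on their own data.
    """
    best_name: Optional[str] = None
    best_score = threshold
    for name, reference in enrolled.items():
        score = cosine_similarity(probe, reference)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


if __name__ == "__main__":
    # Toy embeddings for two enrolled users (real embeddings have hundreds of dimensions).
    users = {"alice": [0.9, 0.1, 0.0], "bob": [0.0, 1.0, 0.2]}
    print(identify([0.85, 0.15, 0.05], users))  # closest to alice
    print(identify([0.0, 0.0, 1.0], users))     # no confident match: access denied
```

Returning `None` rather than the nearest user is the design choice that matters for access control: an unknown face should be rejected, not mapped to whoever happens to be closest.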

For video conferencing and communication on smart screens, Visidon algorithms perform depth computation and portrait segmentation and offer a variety of entertaining filters. One of these is bokeh, which draws attention to the foreground subject by keeping its edges clear and sharp while gradually blurring the background. Video calls can be made more entertaining still with Visidon’s sketch, black-and-white, retro, and cartoon effects.
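To illustrate what “gradual blur” means, here is a minimal sketch of depth-driven bokeh on a small grayscale image, assuming a per-pixel depth map and a focus depth are already available (for instance, from depth estimation and face detection). The linear depth-to-radius rule, the box blur, and the `strength` and `max_radius` parameters are our own simplifications, not Visidon’s algorithm.

```python
from typing import List

Image = List[List[float]]


def box_blur_pixel(img: Image, y: int, x: int, radius: int) -> float:
    """Mean of the (2r+1) x (2r+1) window centred on (y, x), clipped at the border."""
    if radius == 0:
        return img[y][x]
    h, w = len(img), len(img[0])
    total, count = 0.0, 0
    for yy in range(max(0, y - radius), min(h, y + radius + 1)):
        for xx in range(max(0, x - radius), min(w, x + radius + 1)):
            total += img[yy][xx]
            count += 1
    return total / count


def depth_bokeh(img: Image, depth: Image, focus_depth: float,
                strength: float = 2.0, max_radius: int = 3) -> Image:
    """Blur each pixel by an amount that grows with its distance from the focal plane.

    `strength` (blur radius per unit of depth) and `max_radius` are hypothetical knobs.
    """
    out: Image = []
    for y, row in enumerate(img):
        out_row: List[float] = []
        for x in range(len(row)):
            # In-focus pixels get radius 0 and stay sharp; farther pixels get wider windows.
            r = min(max_radius, round(strength * abs(depth[y][x] - focus_depth)))
            out_row.append(box_blur_pixel(img, y, x, r))
        out.append(out_row)
    return out


if __name__ == "__main__":
    img = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
    behind_focus = [[1.5] * 3 for _ in range(3)]  # every pixel 0.5 units behind focus
    print(depth_bokeh(img, behind_focus, focus_depth=1.0))
```

Because the box blur samples neighbours regardless of their depth, sharp foreground values can bleed into the blurred background near edges; this simplified sketch does not handle that, and it is the kind of artifact that more careful depth and mask handling aims to reduce.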

Beyond video conferencing, depth estimation algorithms are used in augmented reality applications such as assisted sports, virtual wardrobes, and interactive gaming.

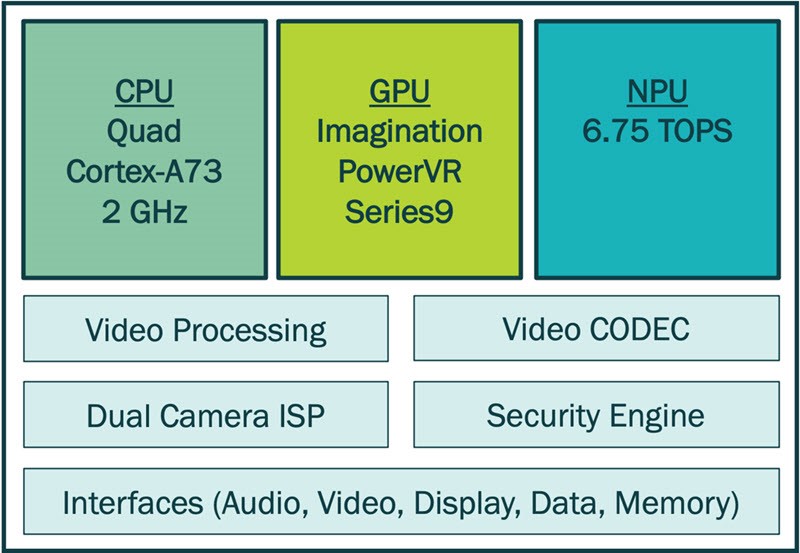

Visidon face analysis and depth compute algorithms are built from different components implemented on the VS680’s NPU, CPU, and GPU (Figure 1).

Among Visidon’s face analysis algorithms, face detection and tracking run on the CPU, while age, gender, smile, and eyeglass detection run on the NPU. Notably, whereas in some SoCs the NPU is “tacked on” alongside the CPU and GPU, the VS680’s NPU and AI pipeline are seamlessly embedded so that they operate within a trusted execution environment, which is critical for security and privacy (Figure 2).

For enhanced video conferencing, Visidon offers depth estimation and portrait segmentation (Figure 3) to separate the main subject in the frame and replace the background; these networks run on the NPU. Face detection then selects the focus point in the frame and in the depth map for the bokeh effect, mask refinement improves the accuracy of the depth map and segmentation mask, and effect rendering produces background blur, background replacement, and playful effects. These stages run on the CPU and GPU. Visidon supports depth estimation from both monocular and stereo cameras. Compared with segmentation-based bokeh, Visidon’s bokeh looks more natural, with fewer object cut-outs and fewer visible errors. It is also highly stable, with no flickering of body parts.

Figure 3: Visidon’s depth estimation, segmentation, and bokeh separate the main subject in the frame and replace the background (top left: input; top right: bokeh with gradual blur).
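The placement of each component on the VS680’s processing units, as described above, can be written down as a simple table. The sketch below encodes it in Python; the `Unit` enum, the stage names, and the `stages_on` helper are our own illustration, not a Synaptics or Visidon API. Because the article says only that the later bokeh stages run “on the CPU and GPU,” mask refinement and effect rendering are listed against both units, while face detection follows the CPU placement given for the face analysis group.

```python
from enum import Enum
from typing import List, Tuple


class Unit(Enum):
    CPU = "CPU"
    GPU = "GPU"
    NPU = "NPU"


Pipeline = List[Tuple[str, Tuple[Unit, ...]]]

# Face analysis group. Face mask detection is omitted because the article
# does not say which unit it runs on.
FACE_ANALYSIS: Pipeline = [
    ("face detection", (Unit.CPU,)),
    ("face tracking", (Unit.CPU,)),
    ("age detection", (Unit.NPU,)),
    ("gender detection", (Unit.NPU,)),
    ("smile detection", (Unit.NPU,)),
    ("eyeglass detection", (Unit.NPU,)),
]

# Video conferencing (bokeh) group, in data-flow order.
BOKEH: Pipeline = [
    ("depth estimation", (Unit.NPU,)),
    ("portrait segmentation", (Unit.NPU,)),
    ("face detection (focus point)", (Unit.CPU,)),
    ("mask refinement", (Unit.CPU, Unit.GPU)),
    ("effect rendering", (Unit.CPU, Unit.GPU)),
]


def stages_on(pipeline: Pipeline, unit: Unit) -> List[str]:
    """Names of the stages in `pipeline` that may run on `unit`, in pipeline order."""
    return [name for name, units in pipeline if unit in units]


if __name__ == "__main__":
    for unit in Unit:
        print(unit.value, "->", stages_on(FACE_ANALYSIS + BOKEH, unit))
```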

In depth sensing applications, depth estimation algorithms allow realistic calculation of depth and distance for objects in images, while segmentation can extract objects and subjects from their surroundings. For example, if a person is sitting on a chair and the ground and the chair are at the same distance from the camera as the person, depth alone cannot separate them, but segmentation can distinguish semantically between the person, the chair, and the ground.
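A toy example makes the chair scenario concrete. In the invented 3x4 scene below, the person, the chair, and part of the ground all sit 2 m from the camera, so a depth threshold selects all of them, whereas per-pixel segmentation labels pick out only the person. The labels, depths, and helper names are made up for illustration and stand in for real network outputs.

```python
from typing import List

# Invented scene: per-pixel class labels (as a segmentation network might
# output) and per-pixel depth in metres (as a depth estimator might output).
LABELS: List[List[str]] = [
    ["background", "person", "person", "background"],
    ["background", "person", "chair", "background"],
    ["ground", "ground", "chair", "ground"],
]
DEPTH: List[List[float]] = [
    [4.0, 2.0, 2.0, 4.0],
    [4.0, 2.0, 2.0, 4.0],
    [2.0, 2.0, 2.0, 2.0],
]


def depth_mask(depth: List[List[float]], max_depth: float) -> List[List[bool]]:
    """Select every pixel no farther than max_depth from the camera."""
    return [[d <= max_depth for d in row] for row in depth]


def label_mask(labels: List[List[str]], target: str) -> List[List[bool]]:
    """Select every pixel whose semantic label equals target."""
    return [[label == target for label in row] for row in labels]


def count(mask: List[List[bool]]) -> int:
    """Number of selected pixels in a mask."""
    return sum(1 for row in mask for selected in row if selected)


if __name__ == "__main__":
    # The depth threshold also grabs the chair and the ground at the same distance.
    print("pixels within 2 m:", count(depth_mask(DEPTH, 2.0)))
    # The semantic label isolates the person.
    print("person pixels:", count(label_mask(LABELS, "person")))
```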

Synaptics’ VS680 is supported by the SyNAP toolkit, which lets customers optimize ML/AI models, including video, vision, and audio models, to take full advantage of the VS680’s capabilities (Figure 4). Working with the VS680 has been easy and has not required any other dedicated platform tools, which are often a drawback when working with specialized hardware.

Synaptics and Visidon will showcase their solution at IBC 2022 (https://show.ibc.org/) in Amsterdam, September 9-12. (SF)

This blog post first appeared in Display Daily.

About the Author

Sharmin Farah is a Marketing Manager at Visidon whose mission is to grow the company’s brand in video enhancement across different market segments. She has a passion for, and expertise in, digital marketing and communications, as well as experience in project management, branding, public relations, and R&D.